Paramilitary Telegram Leaks: From Dump To Data

The other day I was perusing Mastodon, as one does, and noticed a post from journalist and author Micah Lee saying he was exploring a 200GB dump from American paramilitary groups.

This leak was made possible through the incredible bravery of John Williams who infiltrated these groups and exfiltrated all this data. He is currently crowdfunding $10k in part, to "move safe houses and to change his identity" and he also has a Patreon where you can support him

I hadn't heard of such a dump before, and immediately sent Micah an email offering to help with any dataviz.

From SQLite to Parquet: Processing Telegram Leaks for Fast Web Visualization

The Challenge

- Extract the relevant data

- Convert it to a web-friendly format

- Make it quickly accessible for visualization

- Cache it for fast loading across browsers

The Workflow

We start with the repo Micah started that takes the raw dump of .html exports from Telegram and transforms them into a structured sqlite db we can work with.

Once you get that repo setup you can just do

poetry run tasks build-db ~/RAW-LEAK-LOCATION`… and you get a sqlite database in your output folder.

Step 1: Export from SQLite to CSV

First, I extract the messages table to a .csv using SQLite's command-line tool:

sqlite3 -header -csv output/data.db 'select * from messages;' > output/telegram_chats.csvThis gives us a clean CSV file with all our message data, ready for the next transformation- we could also play around with something like csvkit or visitdata

Step 2: Convert CSV to Parquet

Parquet is a columnar storage format that's incredibly efficient for analytical queries and works brilliantly in browser environments. I use DuckDB (an in-process analytical database) to handle the conversion:

duckdb -c "INSTALL parquet; LOAD parquet; CREATE TABLE temp AS SELECT * FROM read_csv('output/telegram_chats.csv'); COPY temp TO 'output/telegram_chats.r5.parquet' (FORMAT PARQUET, COMPRESSION 'SNAPPY');"The compression with Snappy ensures it's both compact and fast to decompress.

Step 3: Host on R2 with Cloudflare Caching

To make the data available globally with minimal latency (and cost to me) we throw it on Cloudflare R2 for hosting

# R2 Configuration

R2_PARQUET_URL=https://r2.ejfox.com/para-leaks/telegram_chats.r5.parquet

The Frontend

I've developed a Nuxt / regl-scatterplot frontend application to interact with this data, available on GitHub:

https://github.com/ejfox/paramilitary-leaks-frontend

You can view it the front-end https://para-leaks.ejfox.com

Data Exploration Examples

Now that we've chopped and screwed our data between sqlite, parquet, and .csv – depending on what application we want to use it for, we can start asking interesting questions from the sqlite database with datasette.

For example, to find the top senders of media you could run:

select id, timestamp, sender, text, media_note, media_filename, filename, group_chat_id

from messages

where "media_filename" is not null

order by media_filename desc

limit 101

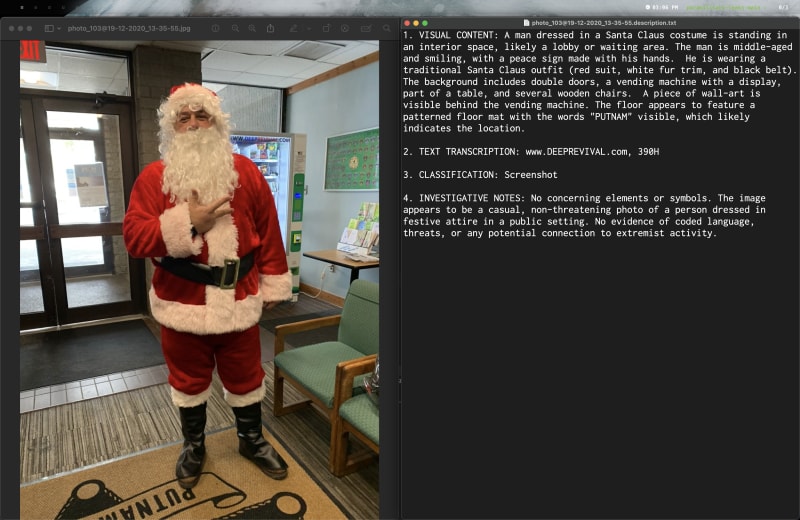

Because so much information is in these media files, I took a stab at having Gemini write data transcriptions of each photo so they could be indexed for text search later.

This experiment went pretty well, but I shelved it to come back to, there's enough to analyze here without involving LLMs and prompt engineering.

Building Custom Visualizations

The real power of this approach is that we can build all sorts of different front-ends or visualizations on top of this data. The Parquet format is super-fast in the browser, making it ideal for interactive visualizations. We've taken a 200GB leak and turned it into something we can explore in the browser.

Some ideas I'm exploring:

- Timeline visualizations of message frequency

- Network graphs of user interactions

- Text analysis and keyword extraction

- Sentiment analysis over time

For more information on this topic, I recommend reading Micah Lee's excellent article:

Exploring the Paramilitary Leaks

ProPublica: The Militia and the Mole (Jan. 4, 2025)